by Brendan Clarey

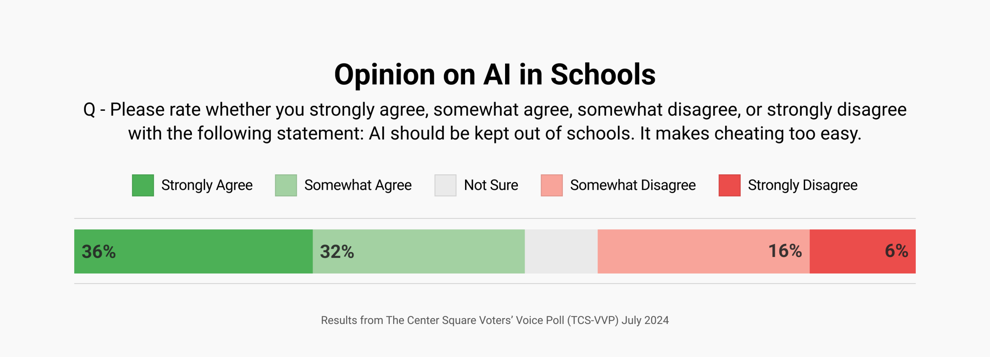

A majority of likely voters say artificial intelligence shouldn’t be in schools because it makes cheating too easy, new poll results show.

The Center Square Voters’ Voice Poll, conducted by Noble Predictive Insights, found that more than two-thirds of likely voters think AI should be kept out of schools.

The recent poll of nearly 2,300 likely voters found that 68% agreed with the statement that “AI should be kept out of schools” because “it makes cheating too easy.” Only 22% favored keeping AI in schools, and the rest said they were not sure.

The poll, conducted July 8-11, included 1,006 Republicans, 1,117 Democrats, and 172 true (non-leaning) independents. It has a margin of error of plus or minus 2.1 percentage points. The Center Square Voters’ Voice Poll is one of only six national tracking polls in the United States.

David Byler, chief of research at Noble Predictive Insights, said his firm’s poll shows that public attitudes toward artificial intelligence have shifted over time as the technology’s limitations and dangers have come to light.

Concern was similarly high among parents. Sixty-nine percent of respondents with children under 18 said they thought AI shouldn’t be in schools. Byler said that’s because they are aware of the risks.

“We’re seeing high levels of concern amongst parents of children under 18 about AI cheating,” Byler said. “This is exactly what you would expect if you hadn’t seen an inch of data.”

Byler said it is natural for parents to be highly concerned about their children’s education, which will look very different from their own because of advances in technology.

“A lot of parents that want their kids not only to be able to think but to produce good quality products that will get them ahead in the work world are going to be very concerned about AI cheating,” Byler said.

The poll’s question on AI in schools was part of a broader set of questions about how voters feel about the nascent technology. According to Byler, the public is now catching up to the technology after the hype that initially surrounded it.

“What we’re seeing here broadly in the AI questions is the public catching up to the technology,” Byler said. “When AI technologies were initially released and then they became very widespread. Suddenly, you had a lot of anecdotes piling up about professors who suddenly saw students writing these long, flowery essays that were on the wrong topic or cited the wrong sources.”

“You saw students using these before teachers had the chance to catch up initially,” Byler said. “You also saw a lot of people attempting to use AI in business settings but then falling victim to costly hallucinations.”

Hallucinations occur when generative AI programs produce content that contains inaccuracies but still sounds plausible. Programs like ChatGPT are based on large language models, which are built from large amounts of source text and use it to generate conversational responses to user prompts. That source text is not always accurate.

“So essentially, what you found was a flood of anecdotes about AI in schools, about students using this to get around homework assignments, turning in suspicious or incorrect work,” Byler said.

“People are worried that AI will allow for cheating without having any comparable benefit,” Byler said. “People are starting to say, ‘This is not just benefit’ or ‘This is not just god-like power. This thing has weaknesses and problems inherent to it.’”

“We at this moment have equilibrated where the average person has seen AI and what it is capable of as well as some of the mistakes and are now sort of backing off from the hype a little bit,” Byler said.

– – –

Brendan Clarey is a contributor to The Center Square.